
The Semantic Web, Good for Whom?

On paper, the Semantic Web is a great idea. Structured data means machines can draw interesting connections between various bits of information. Neat idea, but the Semantic Web offers few benefits to content creators, and it leads to a variety of issues that aren’t obvious at first glance.

Microdata is work

While some tools will generate metadata for you, it’s not a given. A human is going to have to spend some time creating or cleaning up the metadata, and time is almost never free. If you mark up the details for a book using schema.org microdata, in what ways does this benefit you or save you time? It depends on what your website is trying to do, and whether it’s big enough for the results to be meaningful. If you’re a blogger, marking up a book won’t put you on the front page of search results for that book (unless there are fewer than 10 results for a unique keyword, or something like that).
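To make the "microdata is work" point concrete, here is a minimal sketch that renders the same book mention twice: once as ordinary HTML, and once annotated with schema.org `Book` microdata (`itemscope`, `itemtype`, `itemprop`, with the author as a nested `Person`). The book data and the function names `book_plain` and `book_microdata` are hypothetical; the point is how much extra markup someone has to write and keep correct for a single item.

```python
from html import escape

# Hypothetical sample data; any book would do.
BOOK = {"name": "An Example Book", "author": "A. N. Author", "isbn": "978-0-00-000000-0"}


def book_plain(book: dict) -> str:
    """Render a book mention as ordinary HTML, with no machine-readable markup."""
    return (
        "<div>"
        f"<h2>{escape(book['name'])}</h2>"
        f"<p>by {escape(book['author'])}</p>"
        f"<p>ISBN {escape(book['isbn'])}</p>"
        "</div>"
    )


def book_microdata(book: dict) -> str:
    """Render the same mention annotated with schema.org/Book microdata."""
    return (
        '<div itemscope itemtype="https://schema.org/Book">'
        f'<h2 itemprop="name">{escape(book["name"])}</h2>'
        # The author is itself a nested item (a schema.org/Person).
        '<p>by <span itemprop="author" itemscope itemtype="https://schema.org/Person">'
        f'<span itemprop="name">{escape(book["author"])}</span></span></p>'
        f'<p>ISBN <span itemprop="isbn">{escape(book["isbn"])}</span></p>'
        "</div>"
    )


if __name__ == "__main__":
    plain, annotated = book_plain(BOOK), book_microdata(BOOK)
    print(annotated)
    print(f"{len(annotated) - len(plain)} extra characters for a single book")
```

Every property here is another thing a human has to get right by hand, and the overhead scales with every item you mark up.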

Unless you’re building a site where microdata is required for some sort of functionality (a medical site, or one run by some other large institution), there’s generally no need for it.

Microdata leads to page bloat

The search engines that promote verbose metadata standards are the same ones that will penalize you for large pages or slow load times (which can happen if you’re generating this metadata on the fly). There should be less emphasis on inline markup, and more on something like:

```html
<link rel="metadata" href="page_metadata.xml">
```

This would have at least removed the page bloat, and shuffled the machine-readable crap somewhere else. Whether or not the metadata accurately reflects what’s on the page becomes a matter for the interested party to figure out (which they should be doing anyway even with inline markup).
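A rough sketch of the consumer side of that idea, assuming a crawler that cares about metadata looks for the link and fetches the file separately. Note that `rel="metadata"` is the hypothetical value from the snippet above, not a registered link relation, and `find_metadata_urls` is an illustrative name. Only Python's standard `html.parser` and `urllib.parse` are used.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class MetadataLinkFinder(HTMLParser):
    """Collect hrefs of <link rel="metadata"> elements (a hypothetical rel value)."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may hold several space-separated tokens, e.g. rel="metadata alternate".
        rels = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "metadata" in rels and href:
            self.hrefs.append(href)


def find_metadata_urls(html_text: str, page_url: str) -> list[str]:
    """Return absolute URLs of any linked metadata files on a page."""
    finder = MetadataLinkFinder()
    finder.feed(html_text)
    finder.close()
    # Resolve relative hrefs against the page URL, as a crawler would.
    return [urljoin(page_url, href) for href in finder.hrefs]


if __name__ == "__main__":
    page = '<head><link rel="metadata" href="page_metadata.xml"></head>'
    print(find_metadata_urls(page, "https://example.org/posts/semantic-web/"))
```

The page itself carries one short tag; only the parties that actually want the machine-readable data pay the cost of fetching it.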

Microdata can be creepy

My experience with XFN seems rather naive given the privacy scandals that now regularly plague many big net companies. We’ve always known there are bad actors on the web, but the extent of the bad behaviour is what’s alarming. This is something that wasn’t really examined in Which Semantic Web?, but large amounts of metadata can lead to harm, depending on how it’s collected and used.
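To show how little effort collection takes, here is a minimal sketch that turns XFN-annotated links on a single page into labelled relationship edges. The set below is the XFN 1.1 relationship values; the URLs in the usage example and the name `harvest_xfn` are hypothetical. Run something like this across many blogrolls and you have a map of who knows, works with, or dates whom.

```python
from html.parser import HTMLParser

# XFN 1.1 relationship values.
XFN_VALUES = {
    "contact", "acquaintance", "friend", "met", "co-worker", "colleague",
    "co-resident", "neighbor", "child", "parent", "sibling", "spouse", "kin",
    "muse", "crush", "date", "sweetheart", "me",
}


class XfnHarvester(HTMLParser):
    """Collect (href, relationships) pairs from <a rel="..."> links."""

    def __init__(self) -> None:
        super().__init__()
        self.edges: list[tuple[str, list[str]]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        # Keep only rel tokens that are XFN values; ignore others like "nofollow".
        rels = set((attributes.get("rel") or "").lower().split()) & XFN_VALUES
        if href and rels:
            self.edges.append((href, sorted(rels)))


def harvest_xfn(html_text: str) -> list[tuple[str, list[str]]]:
    harvester = XfnHarvester()
    harvester.feed(html_text)
    harvester.close()
    return harvester.edges


if __name__ == "__main__":
    blogroll = (
        '<a href="https://alice.example/" rel="friend met">Alice</a>'
        '<a href="https://bob.example/" rel="colleague">Bob</a>'
    )
    print(harvest_xfn(blogroll))
```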

There are too many standards

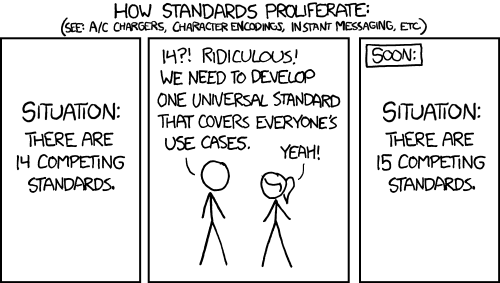

Let’s pretend none of the problems just outlined exist, and that tangible public good comes out of marking up sites. Now you have to pick a way to mark up your site (microformats, RDFa, microdata, JSON-LD, and so on), which feels like the XKCD comic about standards.

Which Semantic Web? hit the nail on the head about this in 2003.

Is there a middle ground?

The only standard I tend to think about these days is the Open Graph protocol, which, at least at the time of this writing, is what this site uses. The benefit of OG is clear: it makes nicer-looking links on sites like Facebook and Twitter. The markup is basic enough that implementation is trivial, and the ~~monopolies~~ giants of today seem to be okay with it. A quick example of this markup is:

```html
<meta property="og:title" content="An example page" />
<meta property="og:description" content="An example description" />
<meta property="og:type" content="article" />
<meta property="og:url" content="https://example.org/example-page/" />
```
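Part of why this works so well is that consuming it is just as simple. The sketch below is a rough illustration of how a link-preview service might read these tags; it is not how Facebook or Twitter actually implement it, and `read_open_graph` is a hypothetical name. It keeps only the first value for each property and ignores Open Graph's repeated-property (array) form.

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collect <meta property="og:..." content="..."> tags into a dict."""

    def __init__(self) -> None:
        super().__init__()
        self.properties: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        prop = attributes.get("property") or ""
        content = attributes.get("content")
        # Keep the first value seen for each og: property.
        if prop.startswith("og:") and content is not None:
            self.properties.setdefault(prop, content)


def read_open_graph(html_text: str) -> dict[str, str]:
    parser = OpenGraphParser()
    parser.feed(html_text)
    parser.close()
    return parser.properties


if __name__ == "__main__":
    example = (
        '<meta property="og:title" content="An example page" />'
        '<meta property="og:description" content="An example description" />'
        '<meta property="og:type" content="article" />'
        '<meta property="og:url" content="https://example.org/example-page/" />'
    )
    og = read_open_graph(example)
    # A preview card needs little more than a title, a blurb, and a link.
    print(og.get("og:title"), "|", og.get("og:description"), "|", og.get("og:url"))
```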

There are more properties and tags, but returns diminish quickly. The cost of the basic implementation is generally low relative to its value (a lot of Hugo themes already include this code), it uses metadata that’s already part of your site anyway, and the page bloat is minimal.
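As a sketch of how cheap this is, the function below builds the four basic tags from page metadata a site generator already has. The keys `title`, `summary`, and `permalink` are hypothetical stand-ins for whatever your front matter actually uses, and `og:type` is hard-coded to `article` as in the example. Values are HTML-escaped so quotes in a title can't break the attribute.

```python
from html import escape


def open_graph_tags(page: dict) -> str:
    """Build basic Open Graph <meta> tags from existing page metadata.

    The keys 'title', 'summary' and 'permalink' are hypothetical; map them
    to your own generator's front matter.
    """
    properties = [
        ("og:title", page["title"]),
        ("og:description", page.get("summary", "")),
        ("og:type", "article"),
        ("og:url", page["permalink"]),
    ]
    return "\n".join(
        f'<meta property="{name}" content="{escape(value, quote=True)}" />'
        for name, value in properties
        if value  # skip empty values rather than emitting content=""
    )


if __name__ == "__main__":
    print(open_graph_tags({
        "title": "An example page",
        "summary": "An example description",
        "permalink": "https://example.org/example-page/",
    }))
```

Because every value comes from data the page already has, there is nothing extra to write or maintain per post.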